This advanced course in the Linear Algebra Specialization focuses on the theory and computations that arise when working with orthogonal vectors. It covers orthogonal transformations, orthogonal bases, and symmetric matrices, with emphasis on their applications in AI and machine learning. These techniques are used to interpret, train on, and work with external data in those fields, which makes the course useful for students pursuing advanced studies in data science, AI, and mathematics.

The course is divided into four modules:

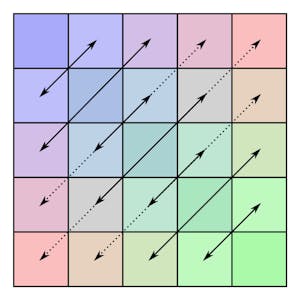

Module 1 covers the inner product, length, and orthogonality, including distance and angles between vectors, with practice exercises to reinforce the material.
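As a rough illustration of the Module 1 topics, the sketch below computes an inner product, a length, a distance, and an angle for two small vectors. It is not taken from the course materials; the function names and the sample vectors `u` and `v` are assumptions chosen for the example, and only the Python standard library is used.

```python
import math

def dot(u, v):
    """Inner (dot) product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def norm(v):
    """Length of v, defined as the square root of v . v."""
    return math.sqrt(dot(v, v))

def distance(u, v):
    """Distance between u and v, defined as the length of u - v."""
    return norm([a - b for a, b in zip(u, v)])

def angle(u, v):
    """Angle in radians between two nonzero vectors."""
    cos_theta = dot(u, v) / (norm(u) * norm(v))
    # Clamp to [-1, 1] to guard against floating-point round-off.
    cos_theta = max(-1.0, min(1.0, cos_theta))
    return math.acos(cos_theta)

# Hypothetical sample vectors, chosen so that they are orthogonal.
u = [3.0, 4.0]
v = [-4.0, 3.0]

print(dot(u, v))       # 0.0, so u and v are orthogonal
print(norm(u))         # 5.0
print(distance(u, v))  # length of u - v = (7, 1)
print(angle(u, v))     # a right angle, in radians
```

Two vectors are orthogonal exactly when their inner product is zero, which is why the first printed value is the quickest check.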

Module 2 covers orthogonal projections, the Gram-Schmidt process, and least-squares problems, with a focus on constructing orthogonal bases and finding least-squares solutions.
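The three Module 2 topics fit together: Gram-Schmidt produces an orthonormal basis, projecting onto that basis gives the closest point in a subspace, and that closest point is the least-squares fit. The sketch below is one possible illustration, not the course's own code. The helper names, the modified Gram-Schmidt variant, and the data in `xs` and `ys` are all assumptions made for the example.

```python
import math

def dot(u, v):
    """Inner (dot) product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

def gram_schmidt(vectors, tol=1e-12):
    """Return an orthonormal basis for the span of `vectors`.

    Uses the modified Gram-Schmidt update (subtracting from the
    running remainder), and skips vectors that are numerically
    dependent on the ones already processed.
    """
    basis = []
    for v in vectors:
        w = list(v)
        for q in basis:
            c = dot(w, q)
            w = [wi - c * qi for wi, qi in zip(w, q)]
        length = math.sqrt(dot(w, w))
        if length > tol:
            basis.append([wi / length for wi in w])
    return basis

def project_onto_subspace(y, orthonormal_basis):
    """Orthogonal projection of y onto the span of an orthonormal basis."""
    result = [0.0] * len(y)
    for q in orthonormal_basis:
        c = dot(y, q)
        result = [r + c * qi for r, qi in zip(result, q)]
    return result

# Hypothetical data: fit a line y = b0 + b1 * x to three points.
xs = [0.0, 1.0, 2.0]
ys = [1.0, 2.0, 4.0]
ones = [1.0] * len(xs)

# The columns `ones` and `xs` span the set of all possible fitted vectors.
basis = gram_schmidt([ones, xs])
fitted = project_onto_subspace(ys, basis)
residual = [y - f for y, f in zip(ys, fitted)]

print(fitted)
print(dot(residual, ones), dot(residual, xs))  # both approximately zero
```

The residual being orthogonal to both columns is the defining property of a least-squares solution, so the last line serves as a sanity check on the fit.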

Module 3 covers the theory of symmetric matrices and quadratic forms, with practice exercises on both topics.
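To make the link between symmetric matrices and quadratic forms concrete, the sketch below evaluates a quadratic form and classifies a 2x2 symmetric matrix by the signs of its eigenvalues. It is an illustration under stated assumptions rather than course material: the function names and the matrices `A` and `B` are hypothetical, and the eigenvalue formula used applies only to the 2x2 symmetric case.

```python
import math

def is_symmetric(A, tol=1e-12):
    """True if A is square and equal to its transpose (within tol)."""
    n = len(A)
    if any(len(row) != n for row in A):
        return False
    return all(abs(A[i][j] - A[j][i]) <= tol
               for i in range(n) for j in range(i))

def quadratic_form(A, x):
    """Evaluate Q(x) = x^T A x."""
    n = len(x)
    return sum(x[i] * A[i][j] * x[j] for i in range(n) for j in range(n))

def eigenvalues_2x2_symmetric(A):
    """Eigenvalues (smallest first) of a 2x2 symmetric matrix [[a, b], [b, d]]."""
    a, b, d = A[0][0], A[0][1], A[1][1]
    mean = (a + d) / 2
    radius = math.hypot((a - d) / 2, b)
    return mean - radius, mean + radius

def classify(A):
    """Classify the quadratic form of a 2x2 symmetric matrix."""
    low, high = eigenvalues_2x2_symmetric(A)
    if low > 0:
        return "positive definite"
    if high < 0:
        return "negative definite"
    if low < 0 < high:
        return "indefinite"
    return "semidefinite"

# Hypothetical example matrices.
A = [[2, 1], [1, 2]]   # eigenvalues 1 and 3
B = [[1, 2], [2, 1]]   # eigenvalues -1 and 3

print(is_symmetric(A), classify(A))
print(is_symmetric(B), classify(B))
print(quadratic_form(A, [1, -1]), quadratic_form(B, [1, -1]))
```

Because a symmetric matrix always has real eigenvalues, the sign pattern of those eigenvalues fully determines whether the quadratic form is positive definite, negative definite, or indefinite.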

The final module is an assessment that covers all of the course material.

Certificate Available ✔

This course consists of four modules covering topics such as orthogonality, orthogonal projections, least squares problems, symmetric matrices, and quadratic forms, culminating in a comprehensive final assessment.

Learn machine learning with TensorFlow on Google Cloud in Japanese, covering topics such as model building, training, and deployment, as well as responsible AI practices....

Learn to create a professional wordcloud from a text dataset using NLP and TF-IDF in Python. Clean, lemmatize, and calculate TF-IDF weights to visualize important...

This hands-on project on Image Super Resolution using Autoencoders in Keras provides practical training to enhance image quality with neural networks.

Learn to create, train, and evaluate a neural network model using Keras with TensorFlow to predict house prices with high accuracy in this 2-hour project-based course....