This comprehensive course, "Distributed Computing with Spark SQL," offered by the University of California, Davis, is designed for students with SQL experience who are ready to tackle big data challenges through distributed computing using Apache Spark.

Throughout the four modules, students will gain a thorough understanding of Spark architecture, Spark SQL, and the fundamentals of data analysis. The course covers combining data with advanced analytics at scale and in production environments, building reliable data pipelines, and optimizing Spark SQL. Students will use the collaborative Databricks workspace to write scalable Spark SQL code, inspect the Spark UI to analyze query performance, and create end-to-end pipelines for data transformation and storage. The course also covers building a medallion lakehouse architecture with Delta Lake to ensure data reliability, scalability, and performance.

Upon completion, students will have strengthened their SQL and distributed computing skills, preparing them for advanced analysis and for a transition to more advanced analytics work as Data Scientists.

Certificate Available ✔

Dive into distributed computing with Spark SQL in this comprehensive big data course. Master SQL on Spark, build reliable data pipelines, and learn to optimize Spark SQL queries for advanced analytics.

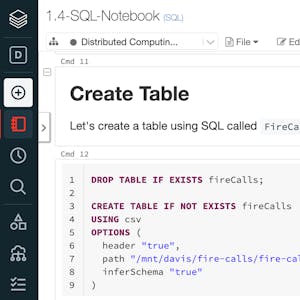

Module 1 introduces Spark and the Databricks environment, covering Spark SQL and how Spark distributes computation. It also includes a course introduction, the importance of distributed computing, working with Spark DataFrames, and importing data. Students will have the opportunity to practice queries in Spark SQL and complete quizzes to reinforce their learning.

Module 2 delves into the core concepts of Spark, such as storage vs. compute, caching, partitions, and troubleshooting performance issues via the Spark UI. It also covers new features in Apache Spark 3.x, including Adaptive Query Execution. Students will engage in practical assignments and quizzes to solidify their understanding.
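As a rough illustration of the partitioning idea mentioned above, the following plain-Python sketch splits rows into partitions, aggregates each partition independently, and then merges the partial results. It is not Spark code and not course material; the function names (`split_into_partitions`, `count_partition`, `distributed_count`) and the sample rows are hypothetical, and a thread pool stands in for a cluster of workers.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def split_into_partitions(rows, num_partitions):
    """Distribute rows round-robin into num_partitions lists."""
    partitions = [[] for _ in range(num_partitions)]
    for i, row in enumerate(rows):
        partitions[i % num_partitions].append(row)
    return partitions


def count_partition(partition):
    """Partial aggregation: count rows per city within one partition."""
    return Counter(city for city, _amount in partition)


def distributed_count(rows, num_partitions=3):
    """Count rows per city by aggregating partitions in parallel, then merging."""
    partitions = split_into_partitions(rows, num_partitions)
    # Each worker sees only its own partition (threads stand in for executors).
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(count_partition, partitions))
    # Final step: merge the per-partition counts into one result.
    total = Counter()
    for partial in partials:
        total.update(partial)
    return dict(total)


# Hypothetical sample data: (city, amount) pairs.
rows = [("Davis", 1), ("Sacramento", 2), ("Davis", 3), ("Oakland", 4), ("Davis", 5)]
result = distributed_count(rows)
print(result)
```

The merged counts do not depend on how many partitions are used, which is the property that lets an engine choose the partition count for performance rather than correctness.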

The third module focuses on engineering data pipelines, including connecting to databases, working with schemas and data types, understanding file formats, and writing reliable data. Students will practice building data pipelines and complete quizzes to test their knowledge.
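To make the idea of schemas and reliable writes a little more concrete, here is a minimal plain-Python sketch that checks records against an expected schema before they would be written, and sets aside the ones that do not match. It is an illustration under assumptions, not Spark or course code: the `SCHEMA` fields, the helper names, and the sample records are all hypothetical.

```python
from datetime import date

# Hypothetical schema: column name -> expected Python type.
SCHEMA = {"order_id": int, "amount": float, "order_date": date}


def matches_schema(record, schema=SCHEMA):
    """Return True if the record has exactly the schema's fields with the expected types."""
    if set(record) != set(schema):
        return False
    return all(isinstance(record[name], expected) for name, expected in schema.items())


def split_valid_rejected(records, schema=SCHEMA):
    """Separate records that match the schema from those that do not."""
    valid, rejected = [], []
    for record in records:
        (valid if matches_schema(record, schema) else rejected).append(record)
    return valid, rejected


# Hypothetical input: one clean record, one with a wrong type, one missing a field.
records = [
    {"order_id": 1, "amount": 19.99, "order_date": date(2024, 1, 5)},
    {"order_id": 2, "amount": "n/a", "order_date": date(2024, 1, 6)},
    {"order_id": 3, "amount": 5.0},
]
valid, rejected = split_valid_rejected(records)
print(len(valid), "valid;", len(rejected), "rejected")
```

Keeping rejected records in a separate list, rather than dropping them, mirrors the common pipeline practice of quarantining bad rows so they can be inspected later.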

Module 4 explores data lakes, data warehouses, and lakehouses. Students will learn about Delta Lake and its advanced features, and work through a demo to understand its application. The module closes with guidance on continuing with Spark and data science, a course summary, and time for self-reflection.
